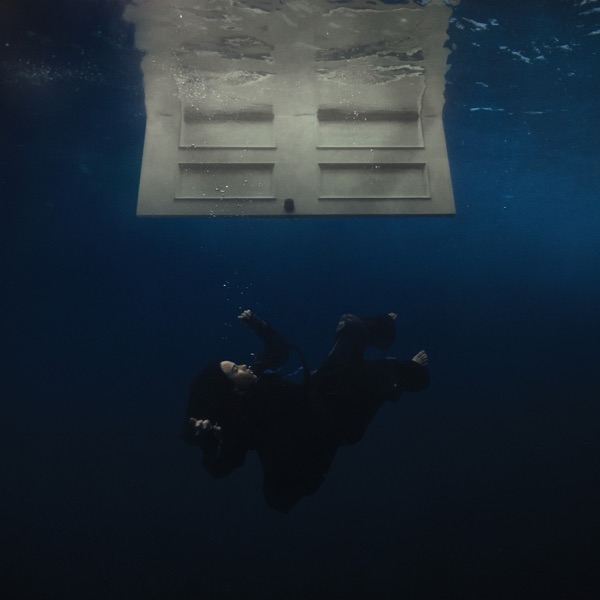

Social media companies need to "get serious" about asking for ID to prevent harmful content being shown to teenagers.

Speaking to KFM, David McNamara, Managing Director at CommSec Cyber Security, said social media companies need to act fast to stop young people being exposed to suicide-related content on their platforms.

He said people often share content without understanding the "consequences and how it's hurting other people."

He made an urgent call for TikTok to change its algorithm.

He said that, as with banking apps, social media companies need to start asking users for ID.

His comments follow reports on Primetime that children as young as 13 are creating and viewing suicidal content on TikTok.

TikTok is refusing to mention how many videos were taken down or restricted.

McNamara said TikTok's response is not good enough, "by a long shot."

Meanwhile, Facebook and Instagram are being investigated over concerns they lead to addictive behaviour in children.

The European Commission will look at systems which create so-called "rabbit hole" effects.

Meta, which owns both platforms, says it has a number of online tools to protect children.